What Are MCP Servers and Why Are People Crazy About Them?

It took me way longer than I’d like to admit to wrap my head around MCP servers.

At first glance, they sound like just another protocol in the never-ending parade of tech buzzwords that tag along with AI.

But trust me, once you understand what they are, you start to see why people are obsessed with them.

This post isn’t meant to be the ultimate deep dive (I’ll link to some great resources for that at the end). Instead, consider it just a lil introduction or a starter on MCP servers.

And no, I’m not going to explain MCP using USB-C as a metaphor. If you get that joke, congrats, you’ve clearly been Googling around like the rest of us. If not… well, give it time. 😛

What even is an MCP Server?

MCP stands for Model Context Protocol, an open standard introduced by Anthropic in November 2024.

Its purpose is to improve how AI models interact with external systems, not by modifying the models themselves, but by providing them structured, secure access to real-world data, tools, and services.

An MCP server is a standalone service that exposes specific capabilities such as reading files, querying databases, invoking APIs, or offering reusable prompts, in a standardized format that AI models can understand.

Rather than building custom integrations for every individual data source or tool, developers can implement MCP servers that conform to a shared protocol.

This cuts down on repetitive boilerplate and reduces complexity in AI applications.

What can an MCP Server actually do?

Quite a bit. Depending on how they’re set up, MCP servers can expose:

- Resources – Stuff like files, documents, or database queries that an AI can read.

- Tools – Actions like sending an email, creating a GitHub issue, or checking the weather.

- Prompts – Predefined instructions or templates that guide AI behavior in repeatable ways.

Each of these is exposed through a JSON-RPC 2.0 interface, meaning AI clients can query what's available, call the appropriate function, and get clean, structured responses.
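To make the JSON-RPC part less abstract, here is a minimal sketch of what those messages can look like on the wire. The method names `tools/list` and `tools/call` come from the MCP specification; the `make_request` helper, the `get_weather` tool, and its `city` argument are made up for illustration, and a real session also involves an initialization handshake that is skipped here.

```python
import json
from itertools import count

# JSON-RPC requests carry an id so the response can be matched to the request.
_ids = count(1)


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request as a plain dict (illustrative helper)."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Step 1: ask the server which tools it offers.
list_tools = make_request("tools/list")

# Step 2: call one of them by name. "get_weather" is a hypothetical tool.
call_tool = make_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Berlin"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

Resources and prompts follow the same pattern with their own method names, so once you have seen one request, the rest look familiar.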

So... how does an MCP server actually work?

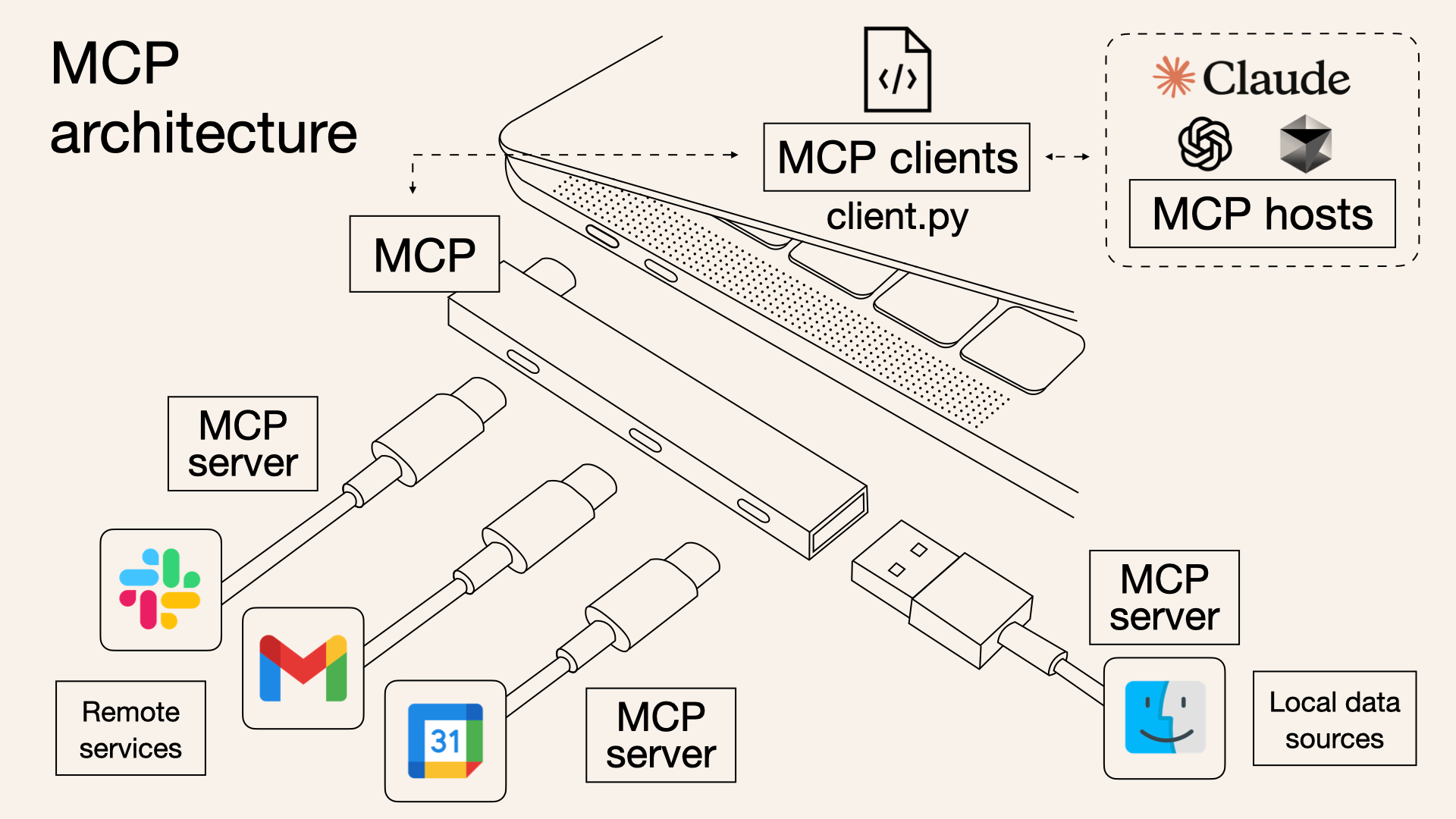

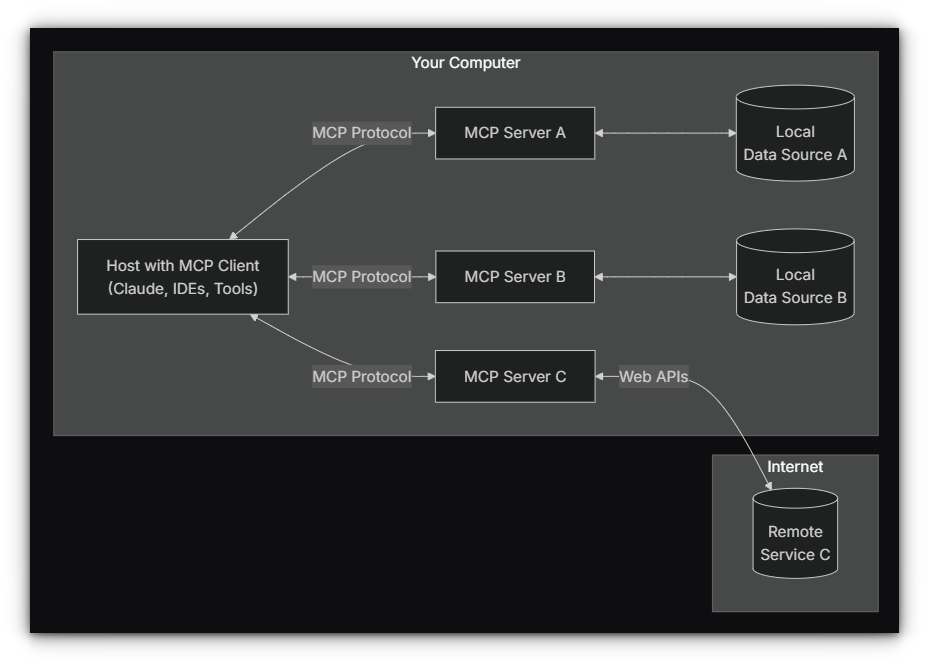

MCP servers follow a well-defined architecture intended to standardize how AI models access external tools, data, and services.

Each part of the system has a clear role, contributing to a modular and scalable environment for AI integration.

- Host Applications

These are the environments where AI agents operate, such as coding assistants, desktop apps, or conversational UIs. They don’t interact with external systems directly, but instead rely on MCP clients to broker those connections.

- MCP Clients

The client is responsible for managing the connection between the AI agent and the MCP server. It handles protocol-level tasks like capability discovery, permissions, and communication state. Clients maintain direct, persistent connections to the server, ensuring requests and responses are handled correctly.

- MCP Servers

The server exposes defined capabilities such as reading files, executing functions, or retrieving documents using the Model Context Protocol. Each server is configured to present these capabilities in a standardized format that AI models can interpret without needing custom integration logic.

- Underlying Data or Tooling

This includes everything the server is connected to: file systems, databases, external APIs, or internal services. The server mediates access, applying permission controls, formatting responses, and exposing only what the client is authorized to use.

This separation of roles between the model host, client, server, and data source allows AI applications to scale and interoperate cleanly.

Developers can focus on defining useful capabilities inside a server, knowing that any MCP-compatible client can access them predictably and securely.
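If it helps to see the four roles side by side, here is a toy, in-memory sketch. Everything in it is an assumption made for illustration: `ToyServer`, `ToyClient`, and the `read_note` tool are invented names, and the "connection" is just a method call. A real setup talks over a transport such as stdio or HTTP and starts with an initialization handshake, both of which are left out.

```python
class ToyServer:
    """Stands in for an MCP server: owns the data and decides what is exposed."""

    def __init__(self, notes):
        self._notes = notes  # the underlying data source
        self._tools = {"read_note": self._read_note}

    def _read_note(self, title):
        return self._notes[title]

    def handle(self, request):
        """Answer a JSON-RPC style request dict with a response dict."""
        method = request["method"]
        if method == "tools/list":
            result = {"tools": [{"name": name} for name in sorted(self._tools)]}
        elif method == "tools/call":
            params = request["params"]
            tool = self._tools.get(params["name"])
            if tool is None:
                return self._error(request, -32602, "Unknown tool")
            text = tool(**params["arguments"])
            result = {"content": [{"type": "text", "text": text}]}
        else:
            return self._error(request, -32601, "Method not found")
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}

    @staticmethod
    def _error(request, code, message):
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": code, "message": message},
        }


class ToyClient:
    """Stands in for an MCP client: builds requests and unwraps responses."""

    def __init__(self, server):
        self._server = server
        self._next_id = 1

    def _send(self, method, params=None):
        request = {"jsonrpc": "2.0", "id": self._next_id, "method": method}
        if params is not None:
            request["params"] = params
        self._next_id += 1
        response = self._server.handle(request)
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]

    def list_tools(self):
        return [tool["name"] for tool in self._send("tools/list")["tools"]]

    def call_tool(self, name, **arguments):
        result = self._send("tools/call", {"name": name, "arguments": arguments})
        return result["content"][0]["text"]


# The "host application" only ever talks to the client, never to the data.
server = ToyServer({"todo": "Write the MCP post"})
client = ToyClient(server)
available = client.list_tools()
note = client.call_tool("read_note", title="todo")
print(available, note)
```

The point of the sketch is the direction of the arrows: the host asks the client, the client asks the server, and only the server touches the notes.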

Wait, so how are MCP Servers different from APIs?

Fair question. It might sound like MCP is just a fancy wrapper around regular APIs, but there are key differences:

| Feature | Traditional API | MCP Server |
|---|---|---|
| Purpose | General software communication | Feed AI models with data, tools, or prompts |
| Interaction | Requires manual integration and parsing | Presents info in model-friendly format |
| Standardization | Varies wildly per service | Unified protocol (MCP) |
| Security | Must be implemented case-by-case | Built-in controls and isolation |
| Use Case | Backend services, apps, etc. | Enhancing AI agents like Claude or Copilot or Cursor |

Basically, APIs were made for apps. MCP servers were made for AI.
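Here is a rough idea of what "model-friendly" means in practice. An MCP tool describes itself with a name, a description, and an `inputSchema` written as JSON Schema, so a client can learn how to call it without reading API docs. The `create_issue` tool and the `missing_arguments` helper below are hypothetical examples, not part of any real server or SDK.

```python
# A hypothetical tool description in the shape MCP servers advertise:
# a name, a human-readable description, and a JSON Schema for the arguments.
create_issue_tool = {
    "name": "create_issue",
    "description": "Create an issue in a GitHub repository.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "repo": {"type": "string", "description": "Repository as owner/name"},
            "title": {"type": "string", "description": "Issue title"},
        },
        "required": ["repo", "title"],
    },
}


def missing_arguments(tool, arguments):
    """Return the required argument names that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


# Because the schema travels with the tool, a client can catch a bad call
# before it ever reaches the server.
problems = missing_arguments(create_issue_tool, {"repo": "octo/demo"})
print(problems)
```

With a traditional API, that knowledge usually lives in documentation that someone has to read and translate into code by hand.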

Want to spin up your own self-hosted MCP Server?

While building a custom MCP server from scratch is entirely possible, you don’t have to start there.

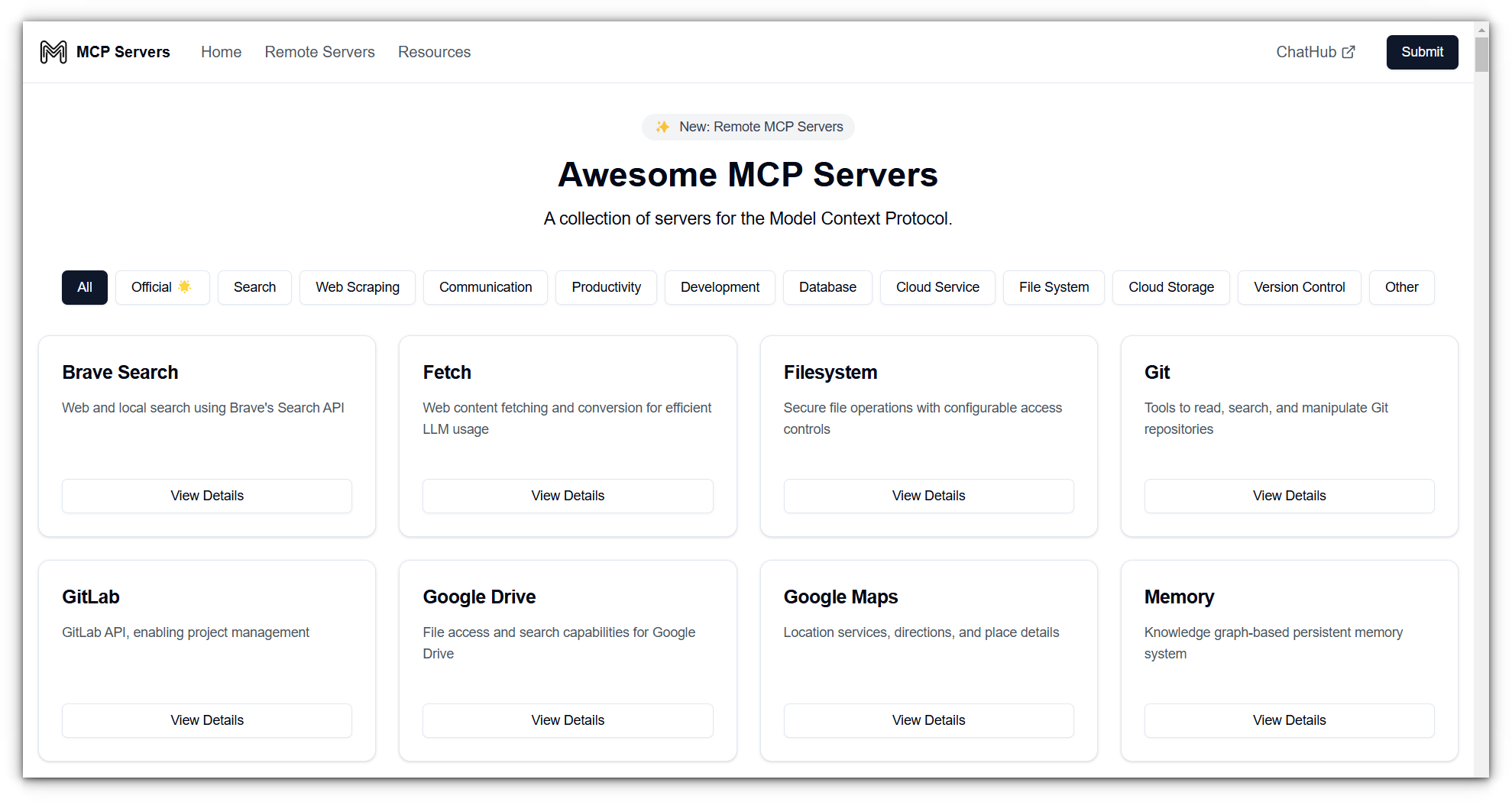

There’s already a growing list of open-source MCP servers you can clone, deploy, and start testing with your preferred AI assistant like Claude, Cursor, or others.
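Hooking one of these servers up to an assistant is usually a matter of configuration rather than code. As a sketch, some desktop clients (Claude Desktop, for example) read a JSON file with an `mcpServers` section that says how to launch each server. The snippet below builds such a file in Python; the `notes` server name and the script path are placeholders, and the exact file location and supported fields depend on the client, so treat this as an assumption and check your client's documentation.

```python
import json


def server_entry(command, args, env=None):
    """Describe how a client should launch one local MCP server."""
    entry = {"command": command, "args": list(args)}
    if env:
        entry["env"] = dict(env)  # optional environment variables
    return entry


config = {
    "mcpServers": {
        # "notes" and the script path are placeholders for illustration.
        "notes": server_entry("python", ["/path/to/notes_server.py"]),
    }
}

config_text = json.dumps(config, indent=2)
print(config_text)
```

Once the client restarts with an entry like this, it launches the server process itself and discovers the available tools over the protocol.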

If you're interested in writing your own server or extending an existing one, stay tuned. We’ll walk through the process step by step in a dedicated upcoming post, using the official Python SDK.

Make sure you’re following or, better yet, subscribed, so you don’t miss it.

Want to learn more about MCP?

Here are a few great places to start:

I personally found these to be good introductions to MCP servers:

- How I Finally Understood MCP — and Got It Working in Real Life - towards data science

- What are MCP Servers And Why It Changes Everything - Hugging Face

Conclusion

And there you have it, a foundational understanding of what MCP servers are, what they can do, and why they’re quickly becoming a cornerstone in the evolving landscape of AI.

We’ve only just scratched the surface, but hopefully, this introduction has demystified some of the initial complexities and highlighted the immense potential these servers hold for building more robust, secure, and integrated AI applications.

Stay tuned for our next deep dive, where we’ll try and build an MCP server and a client from scratch with the Python SDK. Because really, the best way to learn is to get your hands dirty.

Until then, happy hacking. 🧛