How to Configure Max Iteration Behavior with OpenAI Multi-Function Agents?

Quick Outline

This post will demonstrate the following:

How to Configure Max Iteration Behavior With OpenAI Multi-Function Agents

- Installing Frameworks

- Importing Libraries

- Building Language Model

- Testing the Model

- Configuring Max Iteration Behavior

How to Configure Max Iteration Behavior With OpenAI Multi-Function Agents?

Besides the model's accuracy and its ability to understand the query, another concern is that the agent should not get stuck in an infinite loop. The agent should therefore be stopped at some point, so that its performance is not compromised and resources are not wasted.
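The idea of capping iterations can be illustrated without any framework. The following is a minimal sketch in plain Python, not LangChain's implementation; the names run_with_cap, never_finishes, and finishes_on_third_call are hypothetical and exist only for this illustration:

```python
from typing import Callable, Optional


def run_with_cap(step: Callable[[int], Optional[str]], max_iterations: int) -> str:
    """Call step(i) until it returns an answer or the cap is reached."""
    for i in range(max_iterations):
        answer = step(i)
        if answer is not None:
            # The step produced a final answer, so stop early.
            return answer
    # The cap was hit before any answer was produced.
    return "stopped: iteration limit reached"


def never_finishes(i: int) -> Optional[str]:
    """Hypothetical step that would loop forever without a cap."""
    return None


def finishes_on_third_call(i: int) -> Optional[str]:
    """Hypothetical step that answers on its third call (i == 2)."""
    return "done" if i == 2 else None


print(run_with_cap(never_finishes, max_iterations=5))
print(run_with_cap(finishes_on_third_call, max_iterations=5))
```

Without the cap, the first call would never return; with it, the loop ends after five attempts and reports that the limit was reached.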

To learn the process of configuring max iteration behavior with the OpenAI function in LangChain, simply follow this guide:

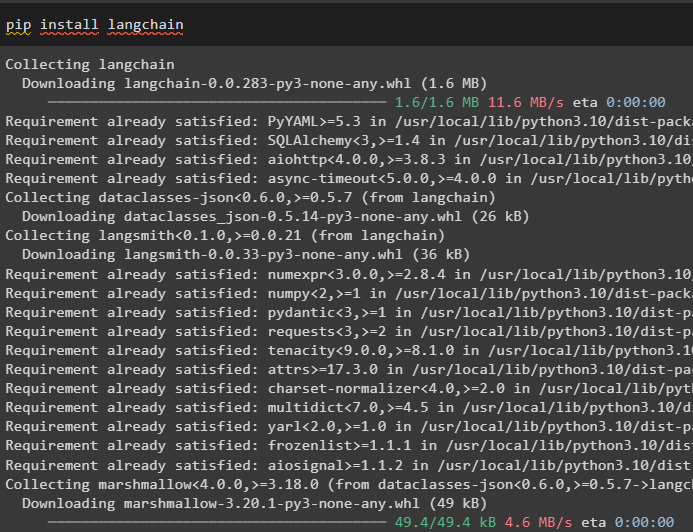

Step 1: Installing Frameworks

Start the process by installing the LangChain framework using the pip command in the Python notebook:

pip install langchain

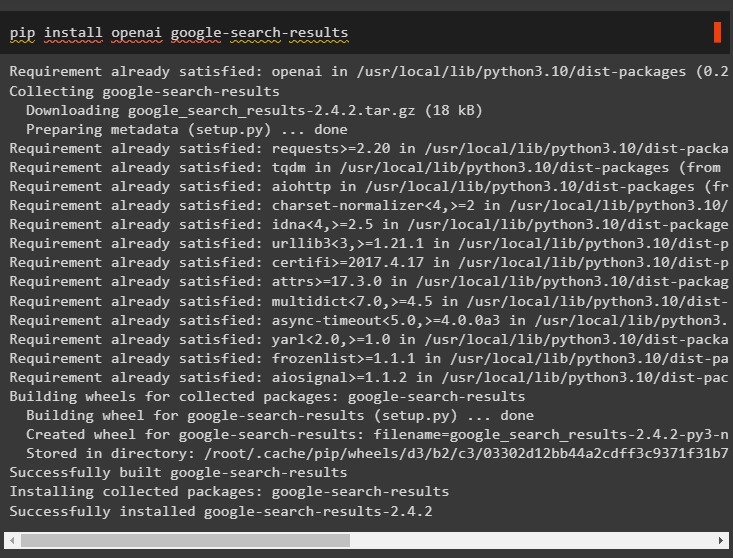

After installing the LangChain framework, install the google-search-results module, which is the SerpApi client that provides the search tool used by the multi-function agent:

pip install google-search-results

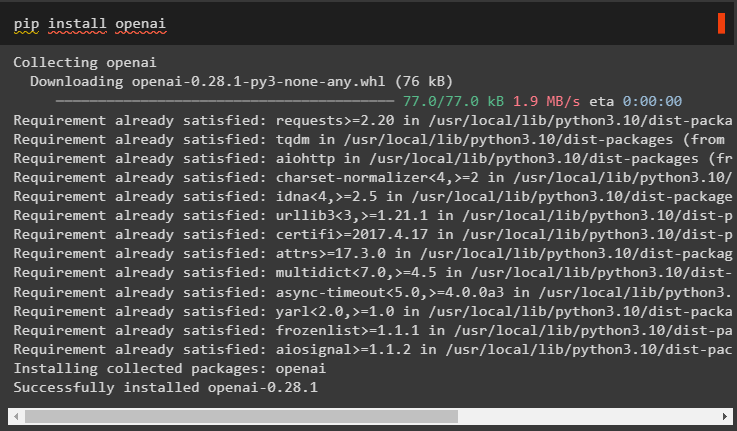

Next, install the OpenAI module, which provides the dependencies for building language models:

pip install openai

Now, set up the environment for OpenAI and SerpApi by providing their API keys when prompted after executing the following code:

import os
import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
os.environ["SERPAPI_API_KEY"] = getpass.getpass("Serpapi API Key:")

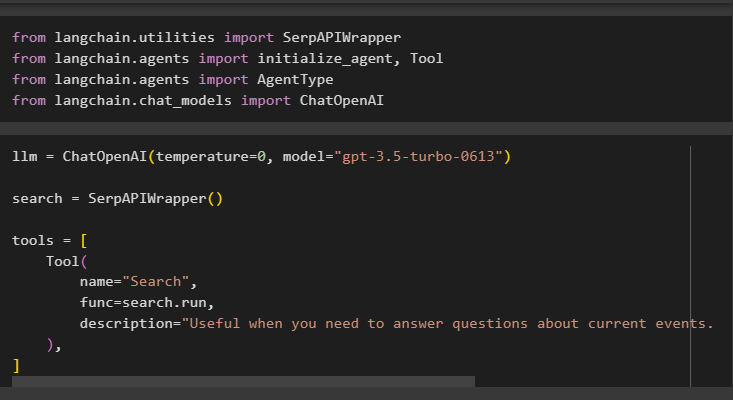
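Before building the agent, it can help to confirm that both keys are actually present in the environment. The following is a small sketch; the helper missing_keys and the REQUIRED_KEYS tuple are hypothetical names introduced here, not part of LangChain or SerpApi:

```python
import os
from typing import List, Mapping

# The two environment variables set in the step above.
REQUIRED_KEYS = ("OPENAI_API_KEY", "SERPAPI_API_KEY")


def missing_keys(env: Mapping[str, str]) -> List[str]:
    """Return the required variable names that are unset or empty in env."""
    return [name for name in REQUIRED_KEYS if not env.get(name)]


missing = missing_keys(os.environ)
if missing:
    print("Missing keys:", ", ".join(missing))
else:
    print("Both API keys are set.")
```

The helper takes any mapping, so it can be checked against a plain dictionary as well as os.environ.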

Step 2: Importing Libraries

Head into the next phase of the process by importing the required libraries for configuring the OpenAI multi-function agents:

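# Needed later for setting langchain.debug
import langchain
# Agent construction helpers
from langchain.agents import initialize_agent, Tool
from langchain.agents import AgentType
# Chat model wrapper
from langchain.chat_models import ChatOpenAI
# Search wrapper used by the tool in the next step
from langchain.utilities import SerpAPIWrapper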

Step 3: Building Language Model

Build the language model using the ChatOpenAI() method and its arguments. Then set up the search using the SerpAPIWrapper() method and wrap it in the list of tools required by the OpenAI agent:

# Assumed settings: the original ChatOpenAI() call is not shown in this post
llm = ChatOpenAI(temperature=0)

# Search wrapper that reads SERPAPI_API_KEY from the environment
search = SerpAPIWrapper()

tools = [
    Tool(
        name="Search",
        func=search.run,
        description="Ask the targeted queries about the current events to get proper responses",
    ),
]
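Each tool entry pairs a name with a callable that takes a string and returns a string. The following plain-Python sketch shows that pairing with an offline stand-in for search.run. It is a simplified illustration, not how LangChain dispatches tools internally, and fake_search, tool_funcs, and call_tool are hypothetical names:

```python
from typing import Callable, Dict


def fake_search(query: str) -> str:
    """Hypothetical offline stand-in for search.run: string in, string out."""
    return f"(stub) no live results for: {query}"


# Maps a tool name to its callable, mirroring the name/func pair of a tool entry.
tool_funcs: Dict[str, Callable[[str], str]] = {"Search": fake_search}


def call_tool(name: str, tool_input: str) -> str:
    """Look up a tool by name and call it with the given input."""
    if name not in tool_funcs:
        return f"unknown tool: {name}"
    return tool_funcs[name](tool_input)


print(call_tool("Search", "weather in New York City"))
```

A stub like this can be handy for dry runs that should not consume SerpApi quota.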

Configure the agent by passing the tools, the llm, the agent type (which was not configured earlier), and verbose=True so that the intermediate steps are printed during execution:

mrkl = initialize_agent(
    tools, llm, agent=AgentType.OPENAI_MULTI_FUNCTIONS, verbose=True
)

Setting the debug flag of LangChain to True prints detailed logs of every chain, model, and tool call while the agent runs. If the execution stops or fails, these logs show what the agent had gathered up to that point for the provided input:

langchain.debug = True

Step 4: Testing the Model

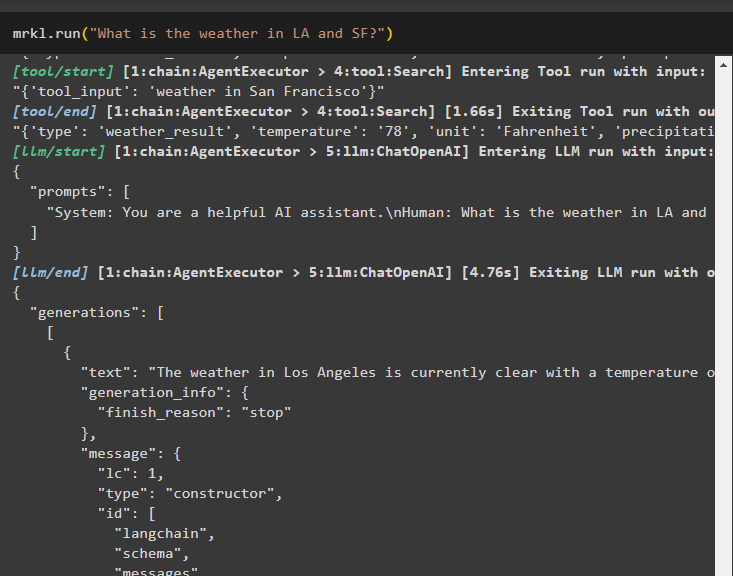

Test the agent stored in the “mrkl” variable by passing the input string as the argument of the run() method:

The output is displayed in the following screenshot; the number of iterations was not restricted at this point:

Step 5: Configuring Max Iteration Behavior

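Here, the code recreates the “mrkl” agent with the max_iterations argument so that the agent takes only a limited number of iterations to generate the output. The early_stopping_method argument controls how the final answer is produced once that limit is reached. Note that this snippet uses AgentType.OPENAI_FUNCTIONS as the agent type: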

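mrkl = initialize_agent(
    tools,
    llm,
    agent=AgentType.OPENAI_FUNCTIONS,
    verbose=True,
    # Stop after at most two iterations
    max_iterations=2,
    # Produce a final answer from the steps gathered so far
    early_stopping_method="generate",
)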

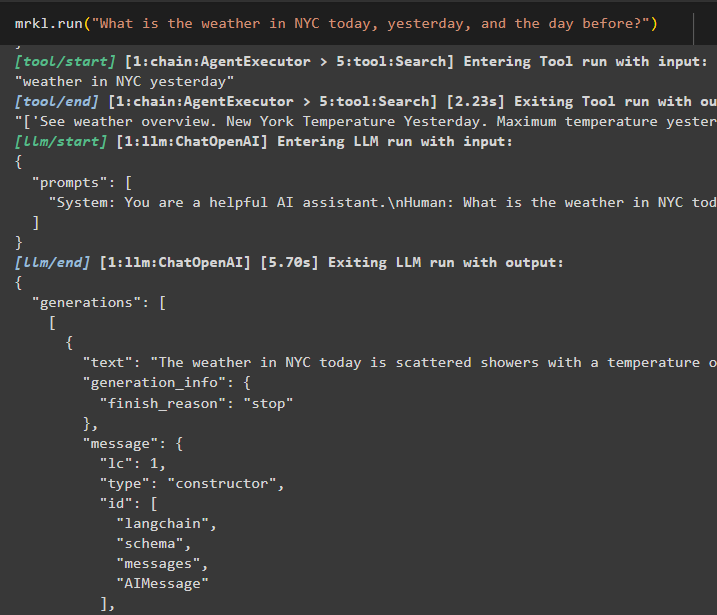
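The difference between the two stopping styles can be sketched in plain Python. This is a toy model under stated assumptions, not LangChain's code: run_toy_agent, toy_plan, and toy_summarize are hypothetical names, and the fixed message for "force" is modeled on LangChain's behavior and should be treated as an assumption:

```python
from typing import List, Optional

# Assumed fixed message returned when the run is cut off with "force".
FORCE_MESSAGE = "Agent stopped due to iteration limit or time limit."


def toy_plan(steps: List[str]) -> Optional[str]:
    """Hypothetical planner that needs three observations before answering."""
    return "final answer" if len(steps) >= 3 else None


def toy_summarize(steps: List[str]) -> str:
    """Hypothetical final pass that answers from whatever was gathered."""
    return "best effort from " + ", ".join(steps)


def run_toy_agent(max_iterations: int, early_stopping_method: str = "force") -> str:
    steps: List[str] = []
    for _ in range(max_iterations):
        answer = toy_plan(steps)
        if answer is not None:
            return answer
        # No answer yet: record one more (fake) tool observation.
        steps.append(f"observation {len(steps) + 1}")
    # The limit was reached without a final answer.
    if early_stopping_method == "force":
        return FORCE_MESSAGE
    if early_stopping_method == "generate":
        return toy_summarize(steps)
    raise ValueError(f"unsupported early_stopping_method: {early_stopping_method}")


print(run_toy_agent(2, "force"))
print(run_toy_agent(2, "generate"))
print(run_toy_agent(5, "generate"))
```

With a limit of two, "force" returns only the fixed message, while "generate" builds an answer from the two observations collected so far. With a limit of five, the toy planner finishes on its own and the stopping method is never consulted.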

Run the agent once more; it now takes at most two iterations before printing the output on the screen:

Executing the above code will generate the answer containing the weather report for New York City:

That’s all about configuring max iteration behavior with multi-function agents in LangChain.

Conclusion

To configure the max iteration behavior with OpenAI multi-function agents in LangChain, install the required modules and set up the environment using the OpenAI and SerpApi credentials. After that, build the language model and the tools for the agent. The user can limit the number of iterations by passing the max_iterations argument, optionally together with early_stopping_method, while initializing the agent and then running it. This guide has elaborated on the process of configuring the max iteration behavior with OpenAI multi-function agents in LangChain.

Source: linuxhint.com