How to Add Memory to an Agent in LangChain?

Quick Outline

This post will demonstrate:

How to Add Memory to an Agent in LangChain

- Install Modules

- Setting up SerpAPI and OpenAI

- Importing Libraries From LangChain

- Configure Prompt Template and Memory

- Testing Chain

- Agent Without a Memory

How to Add Memory to an Agent in LangChain?

Adding memory to an agent lets it interpret a query using the earlier messages and observations as context. Without that context, a model can lose track of what the nouns and pronouns in a message refer to. Coreference resolution is the NLP task of linking a pronoun to the noun it stands for, and it becomes much easier when the earlier turns of the chat are available in memory.

To learn the process of adding memory to the agent in LangChain, simply follow this guide:

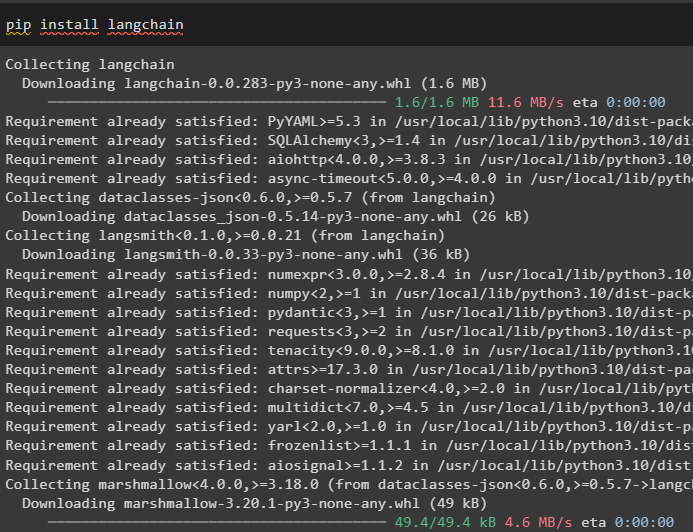

Step 1: Install Modules

Start by installing the LangChain framework, which provides the libraries used in this guide (typically with “pip install langchain”):

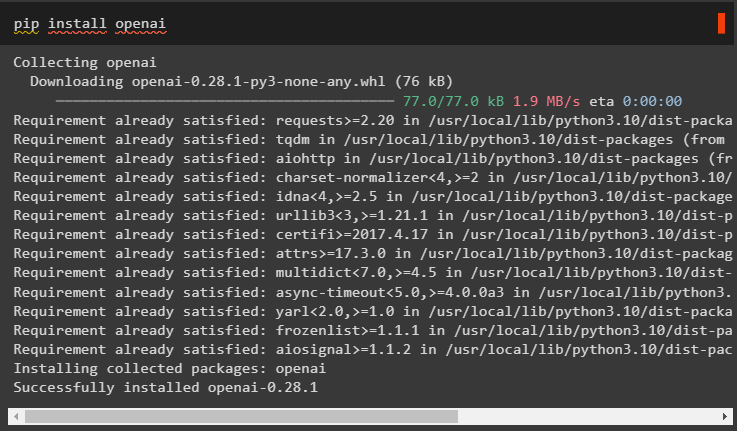

Install the OpenAI module using the “pip” command in the Python notebook (the SerpAPI wrapper used later in this guide also needs the “google-search-results” package). OpenAI is a research and technology company whose large language models can be used to solve many natural language processing problems:

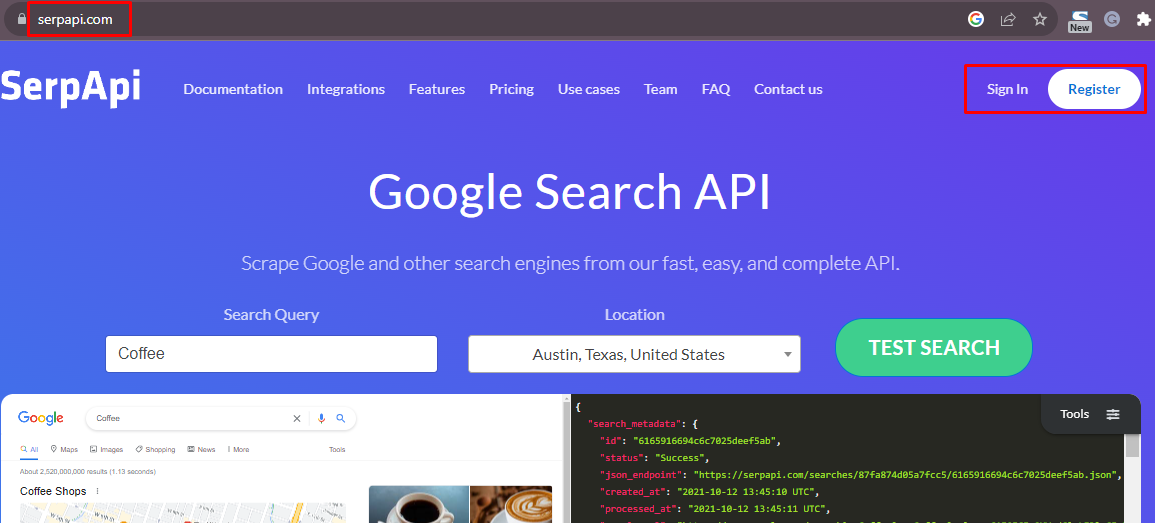

Step 2: Setting up SerpAPI and OpenAI

The agent in this guide uses SerpAPI, a Google Search API, as its tool for looking up queries on the internet. Get an API key by signing in to a SerpAPI account, or register a new one from the top right corner of the site.

After signing in to the account, head to the API Key page from the left navigation bar to get your own private API key.

Set up the environment for OpenAI and SerpAPI by providing the keys from their respective accounts:

import os
import getpass

# prompt for each key without echoing it to the screen
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
os.environ["SERPAPI_API_KEY"] = getpass.getpass("Serpapi API Key:")
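In a notebook that is re-run often, prompting for the keys every time can get tedious. The sketch below shows one possible way to prompt only when a key is not already set. The helper name ensure_key and its ask parameter are hypothetical, written for this guide, and are not part of LangChain, OpenAI, or SerpAPI.

```python
import getpass
import os


def ensure_key(name, prompt=None, ask=getpass.getpass):
    """Return the value of env var `name`, prompting for it only if it is unset.

    `ensure_key` is a hypothetical helper for this guide, not a LangChain API.
    `ask` is injectable so the function can be exercised without a terminal.
    """
    value = os.environ.get(name)
    if not value:
        # Nothing stored yet: ask once and remember the answer for this process.
        value = ask(prompt or f"{name}: ")
        os.environ[name] = value
    return value
```

It could be called as ensure_key("OPENAI_API_KEY", "OpenAI API Key:") in place of the direct assignment, so a key that was exported in the shell beforehand is reused as-is.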

Step 3: Importing Libraries From LangChain

Once the environment is set up, import the libraries needed to build the agent. ConversationBufferMemory, from the LangChain memory module, is the class that will be attached to the agent so it can keep track of the chat:

from langchain.utilities import SerpAPIWrapper
from langchain.agents import Tool
from langchain.agents import ZeroShotAgent  # used below to build the prompt and the agent
from langchain.agents import AgentExecutor
from langchain.memory import ConversationBufferMemory

# importing libraries for building large language model or chatbot
from langchain.llms import OpenAI
from langchain.chains import LLMChain

Create the SerpAPI wrapper, assign it to the search variable, and then describe it as a tool that the agent can use:

# wrapper around SerpAPI; reads SERPAPI_API_KEY from the environment
search = SerpAPIWrapper()

tools = [
    Tool(
        name="Search",
        func=search.run,
        description="You are a chatbot that can answer questions about specific events",
    )
]
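To make the role of the three tool fields more concrete, here is a simplified, offline sketch of how an agent could look up a tool by name and call its function. SimpleTool, fake_search, and call_tool are hypothetical stand-ins written for this guide; the real Tool class and agent loop in LangChain do considerably more than this.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SimpleTool:
    """Hypothetical stand-in mirroring the name/func/description fields of a tool."""
    name: str
    func: Callable[[str], str]
    description: str


def fake_search(query: str) -> str:
    # Offline placeholder for search.run, so no SerpAPI key is needed here.
    return f"results for: {query}"


def call_tool(tools: List[SimpleTool], tool_name: str, tool_input: str) -> str:
    """Find the tool whose name matches and run its function on the input."""
    for tool in tools:
        if tool.name == tool_name:
            return tool.func(tool_input)
    raise ValueError(f"unknown tool: {tool_name}")


demo_tools = [SimpleTool("Search", fake_search, "Looks up answers on the web")]
print(call_tool(demo_tools, "Search", "national sport of Canada"))
```

In the real agent, the tool names and descriptions are placed into the prompt so the model can decide which tool to call, so a description that states what the tool does is likely to be more helpful than one addressed to the chatbot itself.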

Step 4: Configure Prompt Template and Memory

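After defining the tools, configure the prompt template that gives the chat its structure, and create the memory. The “memory_key” argument must match the placeholder name used in the template ("history" here), because that is where the stored conversation is inserted:

# example wording for the prefix; the exact text is an assumption and can be adapted
prefix = """Have a conversation with a human, answering the following questions as best you can. You have access to the following tools:"""

suffix = """Begin!

{history}
Query: {input}
{agent_scratchpad}"""

# configure the structure for the interface of the chat
prompt = ZeroShotAgent.create_prompt(
    tools,
    prefix=prefix,
    suffix=suffix,
    input_variables=["input", "history", "agent_scratchpad"],
)

# store the conversation under the same key as the {history} placeholder
memory = ConversationBufferMemory(memory_key="history")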
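The link between the memory key and the prompt placeholder can be illustrated without calling any API. The following is a minimal sketch, assuming a much simplified buffer: TinyBufferMemory is a hypothetical stand-in and not the LangChain implementation, and the two questions are assumed wording chosen to match the Canada example used later in this guide.

```python
class TinyBufferMemory:
    """Simplified stand-in for a conversation buffer; not LangChain's implementation."""

    def __init__(self, memory_key="history"):
        self.memory_key = memory_key
        self.turns = []

    def save_context(self, user_input, ai_output):
        # Store one exchange as two labelled lines.
        self.turns.append(f"Human: {user_input}")
        self.turns.append(f"AI: {ai_output}")

    def load_memory_variables(self):
        # The key returned here must match the placeholder name in the prompt.
        return {self.memory_key: "\n".join(self.turns)}


demo_suffix = "Begin!\n\n{history}\nQuery: {input}\n{agent_scratchpad}"

demo_memory = TinyBufferMemory(memory_key="history")
demo_memory.save_context("How many people live in Canada?", "(answer from the agent)")

# The follow-up never names the country, but the filled prompt still contains it.
filled = demo_suffix.format(
    input="What is their national sport?",
    agent_scratchpad="",
    **demo_memory.load_memory_variables(),
)
print(filled)
```

If the memory were created with a different key, say "chat_history", the format call above would fail with a KeyError for "history", which is a toy version of the mismatch to avoid between “memory_key” and the template.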

Build the chain by combining the LLM with the configured prompt template, then create the agent with its tools and wrap it in an executor that carries the memory. This is where the previously configured components come together:

# configure the language model and prompt using LLMChain
llm_chain = LLMChain(llm=OpenAI(temperature=0), prompt=prompt)

agent = ZeroShotAgent(llm_chain=llm_chain, tools=tools, verbose=True)

agent_chain = AgentExecutor.from_agent_and_tools(
    agent=agent, tools=tools, verbose=True, memory=memory
)

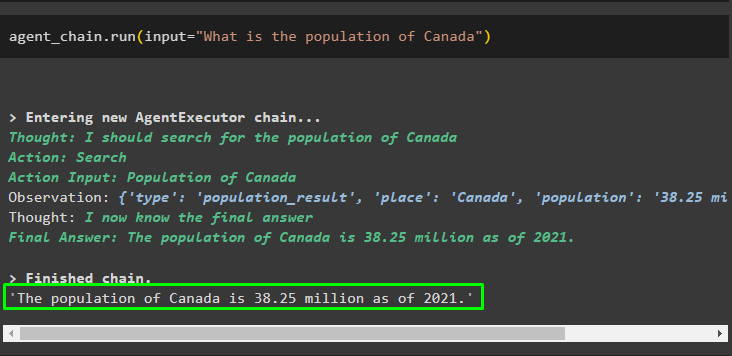

Step 5: Testing Chain

Once the agent is set up with its memory, test it by running the executor and passing the question through the input argument:

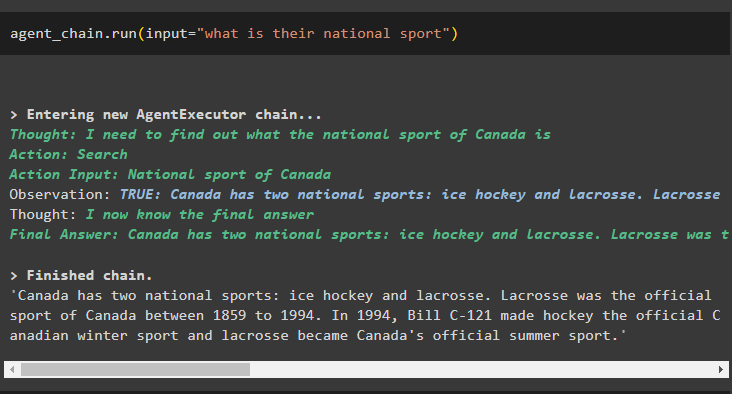

Ask another question without naming the country, to check whether the agent can recover it from the previous message stored in the memory.

The memory has worked: the output gives the national sport of Canada even though the current input does not mention the country. The model used the chat stored in the memory to resolve the reference to the country named in the previous prompt.
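The exact commands for the two calls are not reproduced in the text, so the following is only a hedged sketch of what such a test could look like. The question wording is an assumption, and FakeExecutor is a hypothetical offline stand-in so that the snippet runs without API keys; with the real setup, the agent_chain object built in Step 4 would be passed instead.

```python
def ask_with_follow_up(executor, first_question, follow_up):
    """Run two questions in order on the same executor and return both answers."""
    first_answer = executor.run(input=first_question)
    # The follow-up omits the country; a memory-backed executor sees the first turn.
    second_answer = executor.run(input=follow_up)
    return first_answer, second_answer


class FakeExecutor:
    """Hypothetical stand-in that reports how many earlier inputs it has seen."""

    def __init__(self):
        self.seen = []

    def run(self, input):
        reply = f"input={input!r}, earlier turns={len(self.seen)}"
        self.seen.append(input)
        return reply


answers = ask_with_follow_up(
    FakeExecutor(),
    "How many people live in Canada?",  # assumed wording
    "What is their national sport?",    # assumed wording
)
print(answers[1])
```

Reusing the same executor object for both calls is the important part, since the memory lives on that object and a fresh executor would start with an empty history.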

Step 6: Agent Without a Memory

For comparison, configure an agent without the memory that stores previous chat messages. The prompt template has no history placeholder, and no memory is passed to the executor (the prefix and tools are the ones defined earlier):

suffix = """Begin!

Question: {input}
{agent_scratchpad}"""

# configure the structure for the interface of the chat
prompt = ZeroShotAgent.create_prompt(
    tools, prefix=prefix, suffix=suffix, input_variables=["input", "agent_scratchpad"]
)

# configure the language model and agent using LLMChain
llm_chain = LLMChain(llm=OpenAI(temperature=0), prompt=prompt)

# configure the agent that will not use the additional memory for context
agent = ZeroShotAgent(llm_chain=llm_chain, tools=tools, verbose=True)

agent_without_memory = AgentExecutor.from_agent_and_tools(
    agent=agent, tools=tools, verbose=True
)

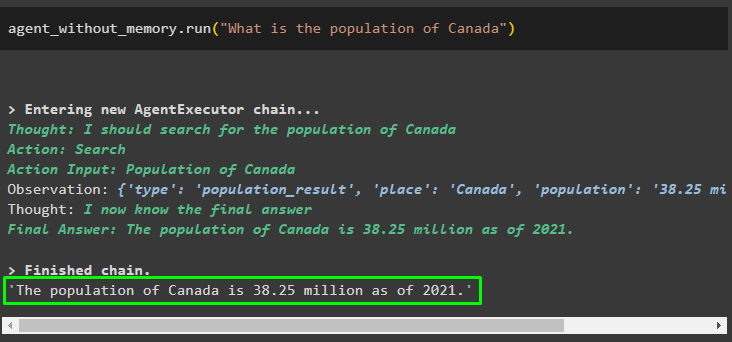

Now run the agent without the memory. It still answers the question, but the exchange is not stored anywhere.

The agent produces the answer for the prompt provided while running without the memory:

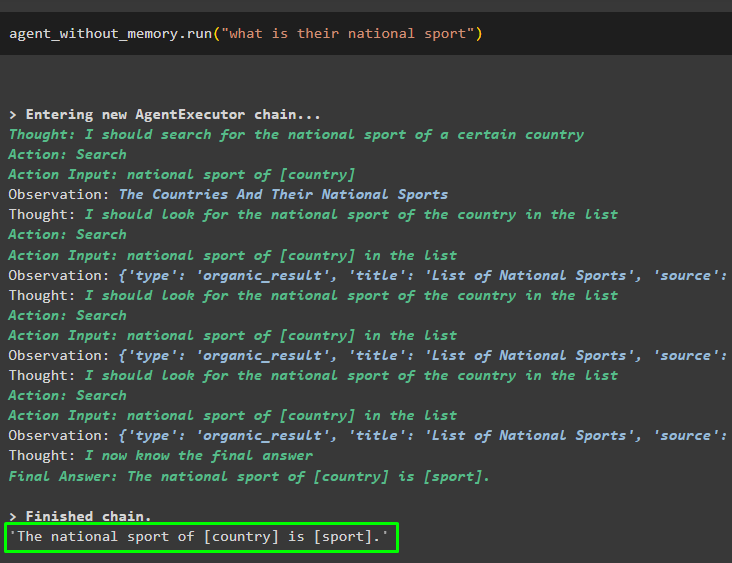

Next, run the agent with an ambiguous command that leaves out part of the information.

The agent could not identify the country from the previous input, because it has no memory holding the earlier message.

That’s all about adding memory in the agent using the LangChain framework.

Conclusion

To add memory to an agent in LangChain, install the required modules and set up the environment for OpenAI and SerpAPI. Get the SerpAPI key by signing in to the account and copying it from the API Key page. Then configure the prompt template with a placeholder for the chat history, attach a ConversationBufferMemory to the agent executor, and test it with an input that gets stored in the memory. Finally, enter an incomplete or ambiguous follow-up to confirm that the agent resolves the missing context from the stored conversation.

Source: linuxhint.com